About me

Hello! I am a Research Assistant at USC, fortunate to be advised by Erdem Biyik. Previously, I completed my Master’s in Electrical and Computer Engineering at UC San Diego, where I worked with Hao Su and Pengtao Xie. I also earned a double major Bachelor’s degree in Computer Science and Mathematics from UW–Madison, where I worked with Vikas Singh.

Email: zhl165@ucsd.edu, lijefrrey@gmail.com

My research focuses on developing embodied agents that can perceive, reason, and act in the physical world. I work at the intersection of multimodal learning, robot learning, and preference alignment. Broadly, I am interested in:

- Vision–Language Models: Improving the robustness of large vision–language models, with a focus on their physical grounding in real-world environments and their downstream applications.

- Efficient Control and Planning: Developing effective policies through imitation learning and reinforcement learning.

- Vision–Language–Action (VLA) Integration: Integrating vision–language–action systems into unified embodied agents.

- Human-centered Alignment: Grounding agents’ behaviors in human intentions using feedback, pairwise preferences, and other weak supervision.

Ultimately, my goal is to build interpretable, reliable, and safe embodied agents that generalize beyond curated demonstrations and operate effectively in open-world environments.

🚀 I am currently applying to PhD programs for Fall 2026. You can see my CV.

Publications and Preprints

* indicates equal contribution.

| Bayesian Flow Networks for Robotic Manipulation. Zhaoyang Li (First Author). Targeting IROS 2026. |

| ARCI: Benchmarking Action Robustness under Contextual Incongruity in Vision–Language–Action Models. Zhaoyang Li (First Author). Targeting IROS 2026. |

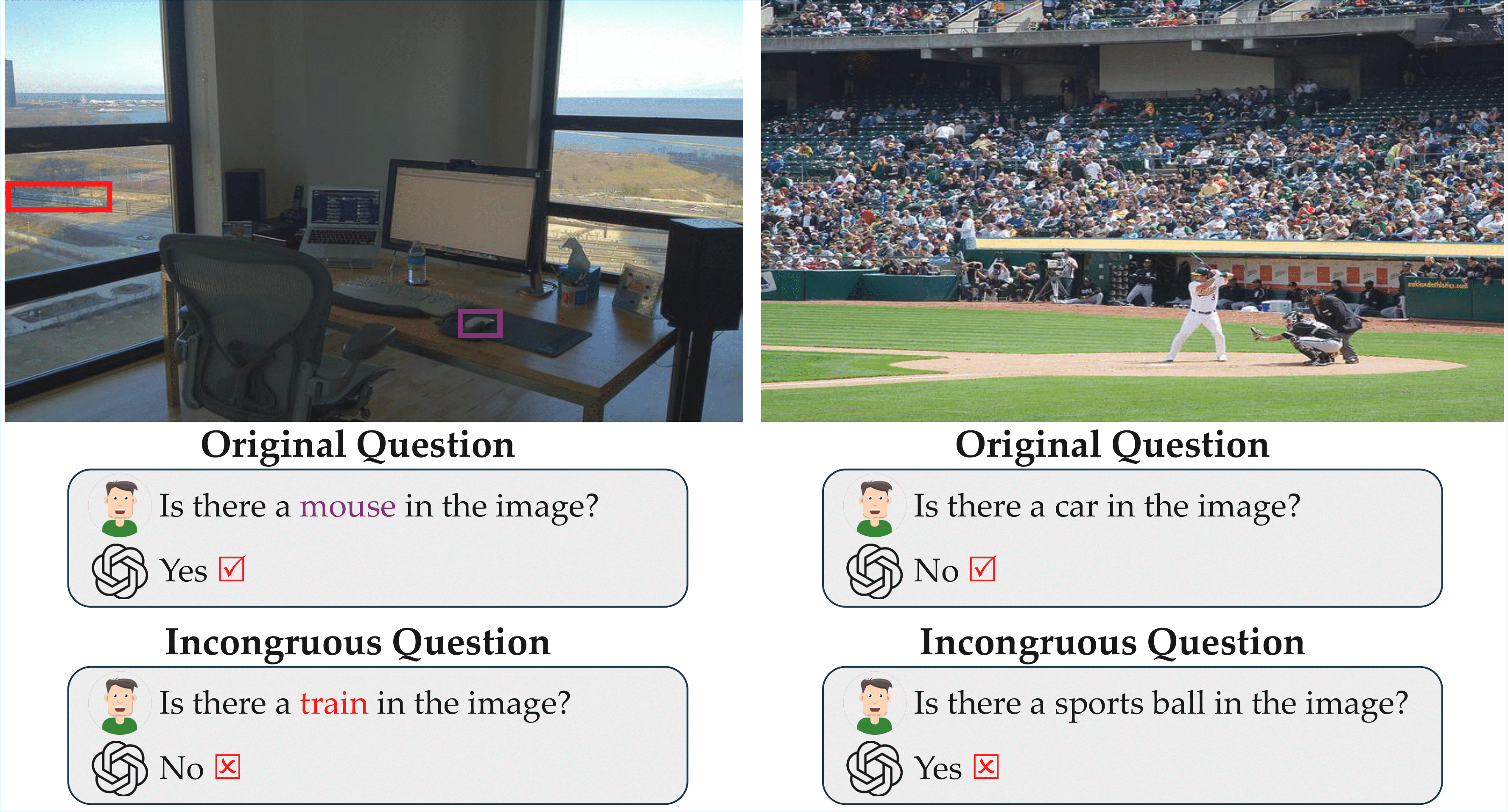

| ORIC: Benchmarking Object Recognition in Incongruous Context for Large Vision-Language Models. Zhaoyang Li*, Zhan Ling*, Yuchen Zhou, Litian Gong, Erdem Bıyık, Hao Su. IEEE / CVF Computer Vision and Pattern Recognition Conference (CVPR) 2026. Paper · Code |

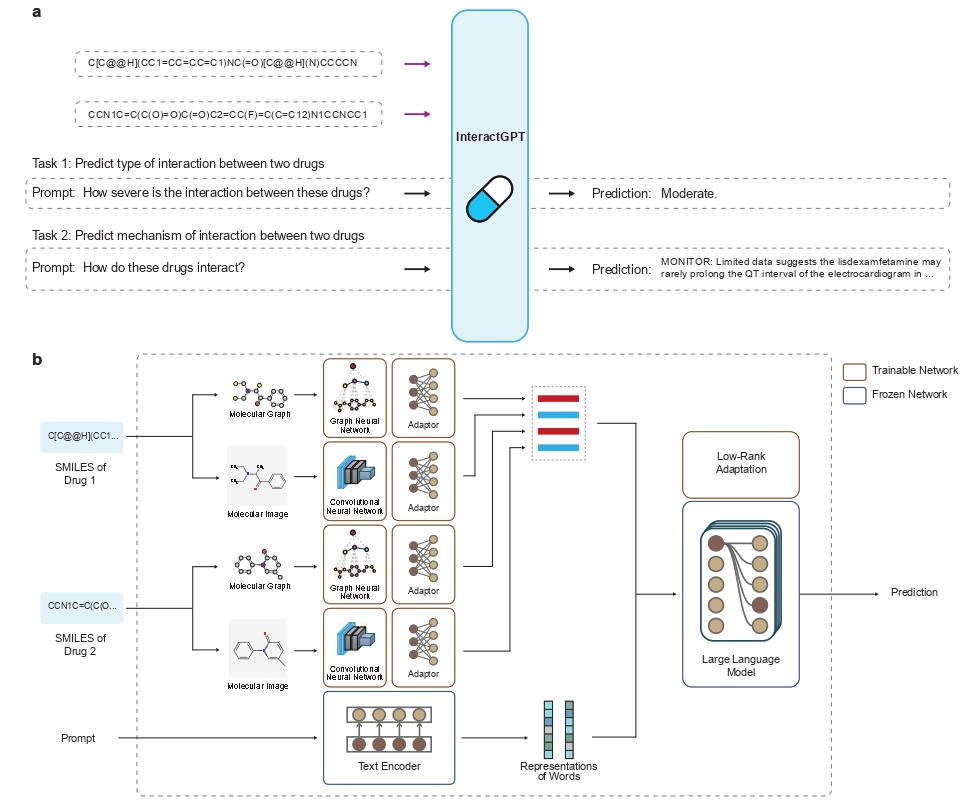

| A Multi-modal Large Language Model for Predicting Mechanisms of Drug Interactions. Zhaoyang Li, Sushaanth Srinivasan, Ninad Ekbote, Pengtao Xie. Submitted to Nature Biomedical Engineering. |

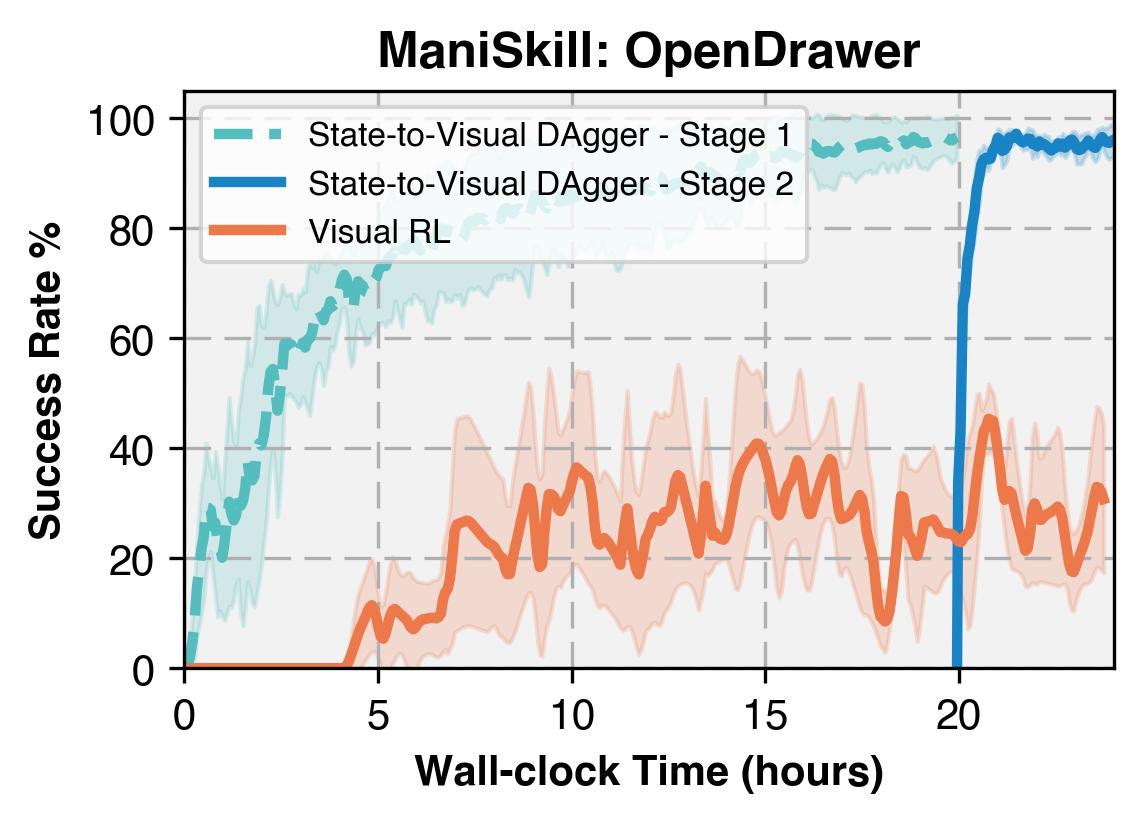

| When Should We Prefer State-to-Visual DAgger Over Visual Reinforcement Learning? Tongzhou Mu*, Zhaoyang Li*, Stanisław Wiktor Strzelecki*, Xiu Yuan, Yunchao Yao, Litian Liang, Aditya Gulati, Hao Su. AAAI Conference on Artificial Intelligence (AAAI) 2025. Paper · Code |

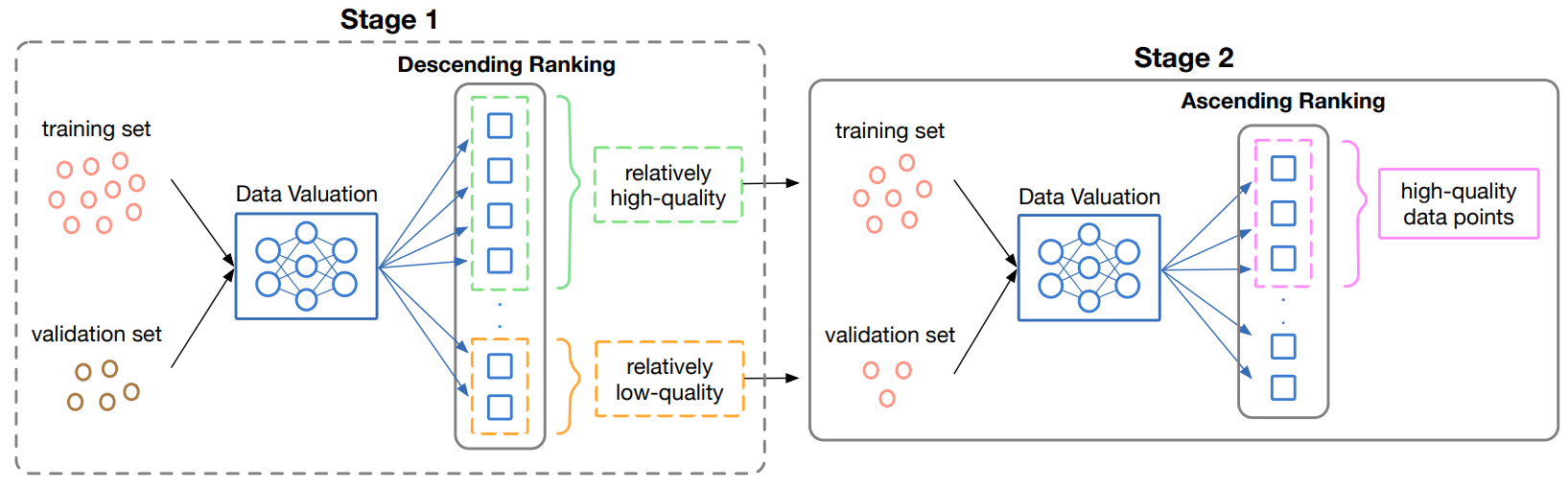

| Just Select Twice: Leveraging Low-Quality Data to Improve Data Selection. Yifei Zhang, Yusen Jiao, Jiayi Chen, Zhaoyang Li, Huaxiu Yao, Jieyu Zhang, Frederic Sala. ATTRIB Workshop at Conference on Neural Information Processing Systems (NeurIPS) 2024; extended version in submission. Paper |

Education

M.S. in Electrical and Computer Engineering (Intelligent Systems, Robotics & Control)

University of California, San Diego | 09/2023 – 06/2025

B.S. in Computer Science & Mathematics (Double Major)

University of Wisconsin–Madison | 01/2021 – 05/2023

Professional Service

- Reviewer, AAAI 2025 Workshop on Large Language Models and Generative AI for Health

- Reviewer, AAAI 2026

Teaching

Teaching Assistant at UW–Madison — Spring 2023

- CS540: Introduction to Artificial Intelligence

Peer Mentor at UW–Madison — Fall 2022

- CS537: Introduction to Operating Systems